This is the last in a series of posts about rapid prototyping for game development with high school students. I will use one of our games, Teen Angst, as a case study in what to do when things don’t go according to plan.

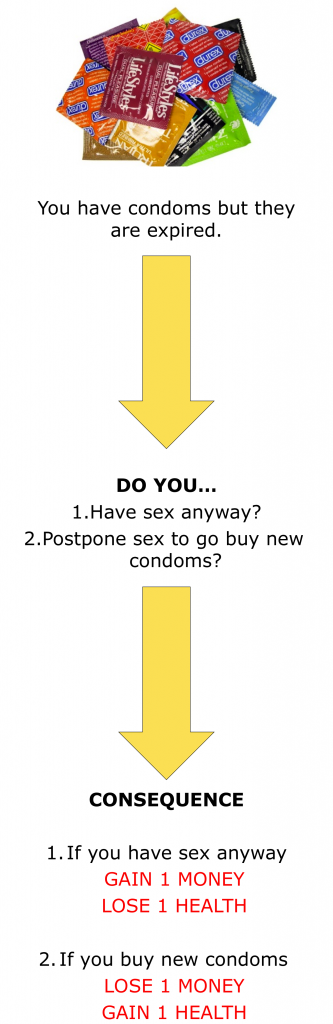

Teen Angst had the broadest scope of any of our games. The plan was to use game mechanics to shape decision-making about the three topics that interested teenagers most: relationships, substance abuse, and nutrition. Players answered a series of questions based on scenarios presented via PowerPoint (Figure 1). Depending on the most likely consequences of each decision, players gained or lost points for four resources: Health, IQ, Friends, and Money.
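The scoring rule above can be sketched as a small data structure in which each answer carries a point change for each of the four resources. This is a minimal illustration, not the actual Teen Angst implementation (the game itself ran as a PowerPoint deck with paper answer sheets); the class and function names, and the scenario and point values in the example, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

RESOURCES = ("Health", "IQ", "Friends", "Money")


@dataclass
class Choice:
    text: str
    # Points gained (positive) or lost (negative) per resource; values are hypothetical.
    deltas: dict[str, int] = field(default_factory=dict)


@dataclass
class Scenario:
    topic: str  # e.g. "Relationships", "Substance abuse", "Nutrition"
    prompt: str
    choices: list[Choice]


def apply_choice(scores: dict[str, int], choice: Choice) -> dict[str, int]:
    """Return new resource totals after the player picks `choice` (input is not mutated)."""
    updated = dict(scores)
    for resource, delta in choice.deltas.items():
        if resource not in RESOURCES:
            raise ValueError(f"unknown resource: {resource}")
        updated[resource] += delta
    return updated


# Hypothetical scenario, invented for illustration only.
party = Scenario(
    topic="Substance abuse",
    prompt="A friend offers you a drink at a party.",
    choices=[
        Choice("Accept", {"Health": -2, "Friends": 1}),
        Choice("Decline", {"Health": 1}),
    ],
)

scores = {r: 0 for r in RESOURCES}
scores = apply_choice(scores, party.choices[1])
print(scores)  # {'Health': 1, 'IQ': 0, 'Friends': 0, 'Money': 0}
```

Keeping the point changes as data rather than hard-coding them into slides makes it easy to audit how many points each resource can earn, which matters for the balance problem discussed next.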

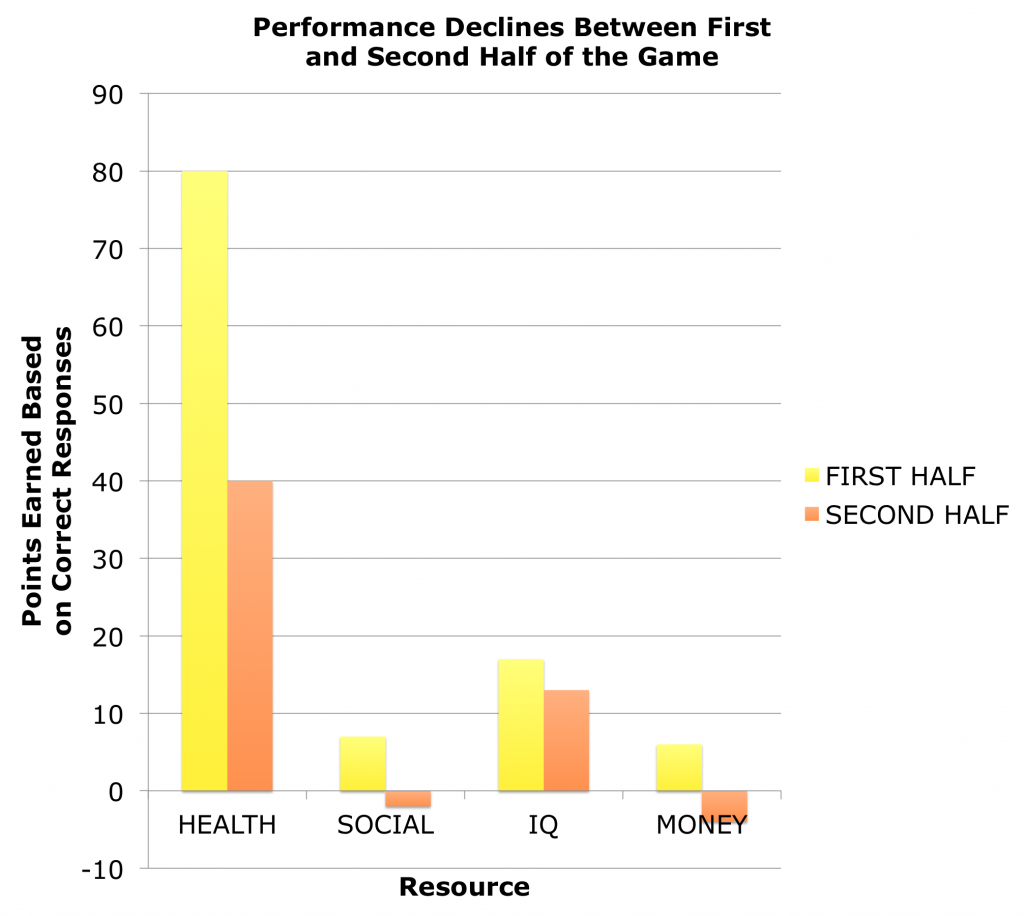

There were several design flaws in the game. First, there was no means of controlling flow. Task difficulty was not adjusted based on performance, which typically leaves the player either bored or frustrated.

Second, we adopted a linear narrative that limited each decision to only a few options. While the psychology literature indicates that too many choices can paralyze a person with indecision, the game world is full of examples where presenting players with more choices increases their engagement with and enjoyment of the game. In a game, having a choice means the player is in control of the outcome, and thus more likely to engage with the system. As educators, we should capitalize on this phenomenon and reconcile it with what we already know: ownership of the learning experience is critical to learning outcomes.

Third, the game was unbalanced. In a perfectly balanced game, all the probable outcomes have an equal likelihood of occurring; in a symmetric game such as rock-paper-scissors, for example, each opponent has an equal opportunity to win. Balance applies to more than who wins: the reward-punishment contingencies, the player resources, or other factors that affect the final outcome can also be out of balance. In our game, the reward-punishment contingencies were not evenly distributed across resources. Even though the number of questions was balanced across topics and resources, the point allocation for each topic-resource combination was not. For example, players had more opportunities to earn Health points than points for any of the other resources.
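One way to catch this kind of imbalance before data collection is to tally, for each resource, how many points a player could earn across the whole question bank. The sketch below assumes a hypothetical question bank stored as (topic, resource, points) records, where each record is the best-case gain available from one question; the records are invented for illustration and do not reflect the real Teen Angst point values. The max-to-min ratio is simply one convenient summary, not a standard balance metric.

```python
from collections import defaultdict

RESOURCES = ("Health", "IQ", "Friends", "Money")

# Hypothetical question bank: (topic, resource, best-case points for that question).
QUESTIONS = [
    ("Relationships", "Friends", 2),
    ("Relationships", "Health", 3),
    ("Nutrition", "Health", 3),
    ("Nutrition", "Money", 1),
    ("Substance abuse", "Health", 3),
    ("Substance abuse", "IQ", 1),
]


def max_points_per_resource(questions):
    """Sum the best-case points a player can earn for each resource."""
    totals = defaultdict(int)
    for _topic, resource, points in questions:
        totals[resource] += points
    # Include every resource, even ones that never appear, so gaps are visible.
    return {r: totals[r] for r in RESOURCES}


def imbalance_ratio(totals):
    """Ratio of the largest to the smallest resource total (1.0 means balanced)."""
    values = list(totals.values())
    if min(values) <= 0:
        return float("inf")
    return max(values) / min(values)


totals = max_points_per_resource(QUESTIONS)
print(totals)                   # {'Health': 9, 'IQ': 1, 'Friends': 2, 'Money': 1}
print(imbalance_ratio(totals))  # 9.0
```

A ratio well above 1.0 flags a resource that players can accumulate much more easily than the others, which is the pattern described above for Health, even when the number of questions per resource looks even.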

We were aware of all of these design issues going into data collection, but we didn’t realize they would have such a strong impact on the data. Six high school students participated in the experiment, but data from one participant were excluded because that student did not follow the instructions. Subjects played the game and recorded their responses on answer sheets. As I mentioned in a previous post, there was an error in creating the answer sheets, which made it difficult to match individual answers to their corresponding questions. However, we were able to compare the points earned in the first half of the game with those earned in the second half. We predicted that players would earn more points in the second half because of practice effects.

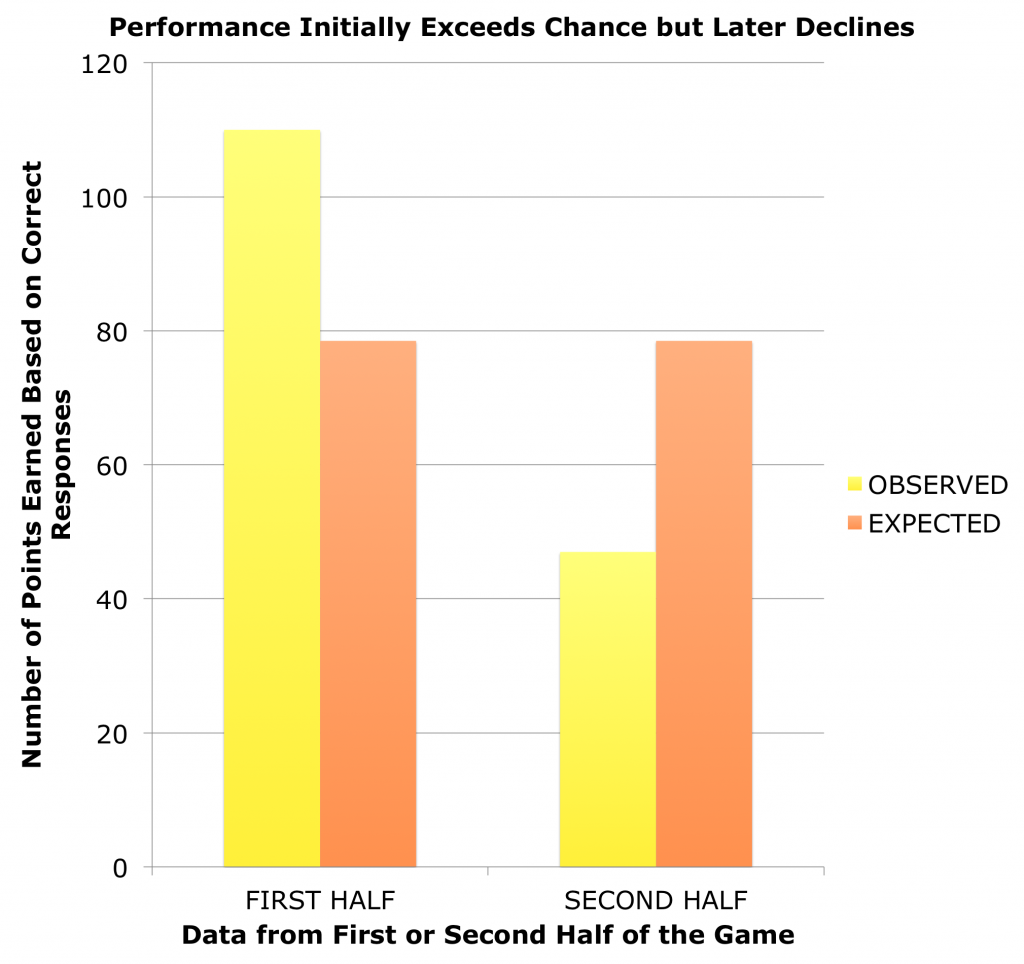

Interestingly, performance exceeded chance levels during the first half of the game (Figure 3), which suggests that subjects were attentive and understood the rules. Nevertheless, contrary to our expectations, performance decreased during the second half of the game (Figure 2). Data were combined across all subjects and all categories (i.e., Relationships, Drugs, and Nutrition). For each resource (i.e., Health, IQ, Friends, and Money), performance during the second half of the game was worse than during the first half, χ²(1, N = 157) = 80.067, p < 0.0001. Similarly, performance during the second half of the game was worse when data were combined across resources (Figure 3), χ²(1, N = 157) = 25.28, p < 0.0001. This decrease in performance might be attributed to fatigue. However, it is more likely the result of an imperfect game design, an interpretation consistent with post-game interviews in which the players reported being bored.
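A χ² statistic with one degree of freedom is what a 2 × 2 contingency table produces, for example game half (first, second) crossed with answer outcome (points gained, points lost). The post does not say exactly how its table was laid out, so that layout is an assumption here, and the counts below are invented placeholders, not the study’s data. The sketch computes Pearson’s χ² in plain Python and, because df = 1, converts it to a p-value with the identity p = erfc(√(χ²/2)).

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) for a 2x2 table."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2


def p_value_df1(chi2):
    """Upper-tail p-value for df = 1: chi2 is a squared standard normal, so p = erfc(sqrt(chi2 / 2))."""
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical counts: rows = first half, second half; columns = points gained, points lost.
observed = [[60, 20], [30, 47]]
chi2 = chi_square_2x2(observed)
n = sum(map(sum, observed))
print(f"chi2(1, N = {n}) = {chi2:.2f}, p = {p_value_df1(chi2):.2g}")
```

If SciPy is available, `scipy.stats.chi2_contingency(observed, correction=False)` should give the same statistic; by default that function applies Yates’s continuity correction to 2 × 2 tables, which yields a somewhat smaller value.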

After six weeks of hard work, a result like this could be devastating to a student. At worst, the student might doubt the scientific method and lose interest in science. It is critical to spend as much time with the student as possible to make sure they understand the value of impartiality, learn from failure, and persist in their quest for truth. I found it useful to recount my personal experiences with failed experiments as well as examples from famous scientists. Shifting the focus to improving the game was also helpful. It was particularly interesting, however, that the student found some solace in knowing her results were important because they provided evidence for the lab’s overarching hypothesis, namely, that properly employed game mechanics are useful for education. In her case, an imperfect design produced a baseline against which future iterations of the game will be compared. We both learned a lot from each other, and the student is sure to benefit from this experience in the future.

Figure 2

Figure 3