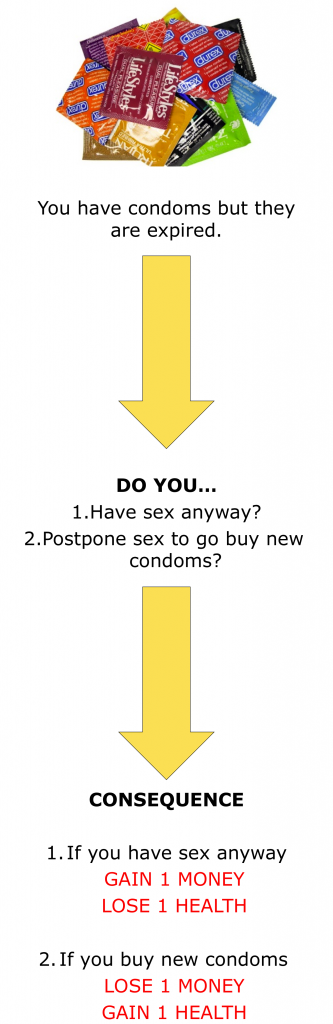

And So it Begins… Again

Last year I documented the development of several games designed by high school students and college undergrads in a six-week program sponsored by the Department of Education. Over the course of the summer, I mentored seven students and we produced seven board games for education or …
