And sometimes you get unlucky. Sometimes designing a game or an experiment takes days, weeks, or months of tweaking multiple variables. It’s like flying a helicopter. Move the stick and the other controls have to be adjusted to compensate. As you panic, you and your experiment hurtle to the ground in a screaming ball of flames.

A student approached me with an interest in racial prejudice and cognitive bias. Both of these topics have been studied in great detail, and there are educational programs designed to teach students about racial stereotypes. However, to my knowledge, there aren’t any games that educate students by exposing them to their own cognitive biases. Consequently, we thought it would be a good idea to have students participate in a mystery game where they had to find a killer via clues and mug shots. We anticipated that players would demonstrate an in-group bias for their own race** and an out-group bias for other races. Specifically, they were expected to identify members of other races as the perpetrator more often than members of their own race. They were also expected to identify members of their own race as victims more often than members of other races.

We were particularly interested in an effect reported by Hilliar and Kemp (2008). In their experiment, faces were morphed between stereotypical Asian and Caucasian faces, and subjects were more likely to report these morphs as Asian when they were labeled with an Asian name rather than a European name. Unfortunately, every game mechanic we came up with introduced a confounding variable into the experiment. There is an art to identifying confounds, and younger students typically lack the experience it takes to find them. As facilitators, we should weed out the confounding variables without destroying the student’s self-esteem. My solution is to first notify the student that you are working in parallel on a new design. At this point, the student is already aware there are difficulties with the experiment, so the news is not too surprising. Then, take the best suggestions the student made during lab meetings and write them down. Incorporate as many of these contributions into your design as possible. Graduate students are well accustomed to having their experimental proposals nitpicked in order to make them stronger. However, younger students often feel that the war being waged on the experimental design is a personal attack. Because it is more important to keep students interested in science, I recommend keeping the academic lashings to a minimum. Show the student what the problem was and how to improve the design, and let them know their contribution was essential. You don’t want to turn into this guy.

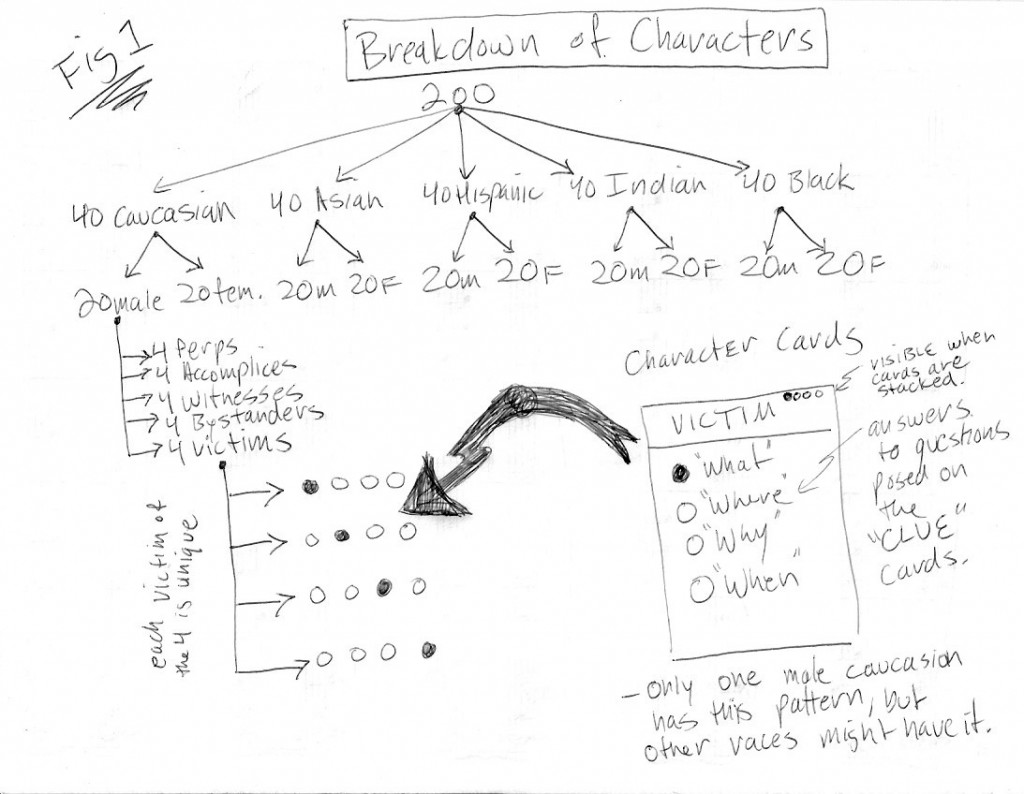

The most critical improvement in the new game is that it now relies upon a more robust experimental paradigm. Rather than using ethnic-sounding names and morphed faces, players will make judgments about several faces from five major racial groups attending York College (Caucasian, Asian, Hispanic, Indian, and Black). Forty faces from each racial group will be combined to yield 200 faces (Figure 1). Within each racial group, 20 faces will be male and 20 will be female. The game is still a mystery/whodunit, and the object of the game is to accurately identify the perpetrator, an accomplice, a witness, an innocent bystander, and the victim. Each of these characters represents an archetype associated with a particular emotional valence, ranging from negative to positive. For example, an accomplice is typically regarded as negative, but not as bad as the perpetrator. Players rank faces in the game using these characterizations, much as they would on a Likert scale. We predict players will be more likely to identify members of their own race as victims, bystanders, or witnesses. Conversely, players are expected to identify members of other races as perpetrators or accomplices.

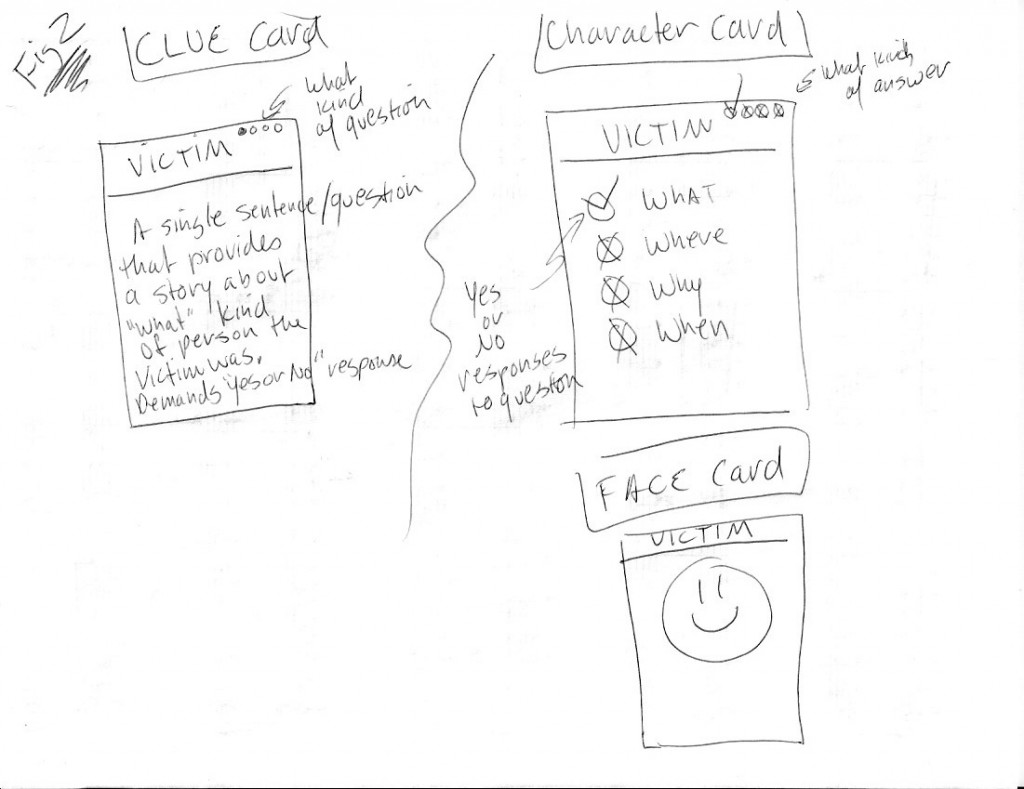

The core mechanic of this game is similar to Mastermind Challenge (a two-player version of the classic Mastermind), Guess Who (a pictorial variation of 20 Questions), and Clue. Like a combination of Mastermind and Guess Who, each player will attempt to identify the five characters that their opponent selected before the start of the game. Opponents are allowed to answer simple “yes-no” questions on each round of play, and players are allowed to adjust their guesses based on this feedback. However, unlike these games, players will be making judgments based on both faces and clues that are revealed on clue cards during each round of play (Figure 2). Face cards are accompanied by character cards, which indicate certain qualities about the character. After 40 rounds of play, the players guess who the five characters are, most likely revealing a racial bias.
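The Mastermind-style feedback can be sketched in a few lines. This is only an illustration, assuming each answer key maps the five character categories to a single face-character pair; the role labels, the card IDs, and the function name `score_guess` are hypothetical and not part of the actual game materials. The opponent reports whether the whole guess is right and how many entries match, without saying which ones.

```python
# Hypothetical labels for the five character categories described in the post.
ROLES = ("Perpetrator", "Accomplice", "Witness", "Bystander", "Victim")


def score_guess(answer_key, guess):
    """Return (all_correct, n_correct) without revealing which roles match.

    Both arguments map a role name to a card ID. The IDs are placeholders
    invented for this sketch.
    """
    n_correct = sum(1 for role in ROLES if guess.get(role) == answer_key[role])
    return n_correct == len(ROLES), n_correct


# Example answer key and guess with made-up card IDs.
answer_key = {
    "Perpetrator": "P-07",
    "Accomplice": "A-21",
    "Witness": "W-03",
    "Bystander": "B-33",
    "Victim": "V-12",
}
guess = {
    "Perpetrator": "P-07",
    "Accomplice": "A-02",
    "Witness": "W-03",
    "Bystander": "B-10",
    "Victim": "V-40",
}

all_correct, n_correct = score_guess(answer_key, guess)
print("yes" if all_correct else "no", n_correct)
```

Returning only a count keeps the feedback as coarse as it is in Mastermind, which is what forces players to keep revising their guesses across rounds.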

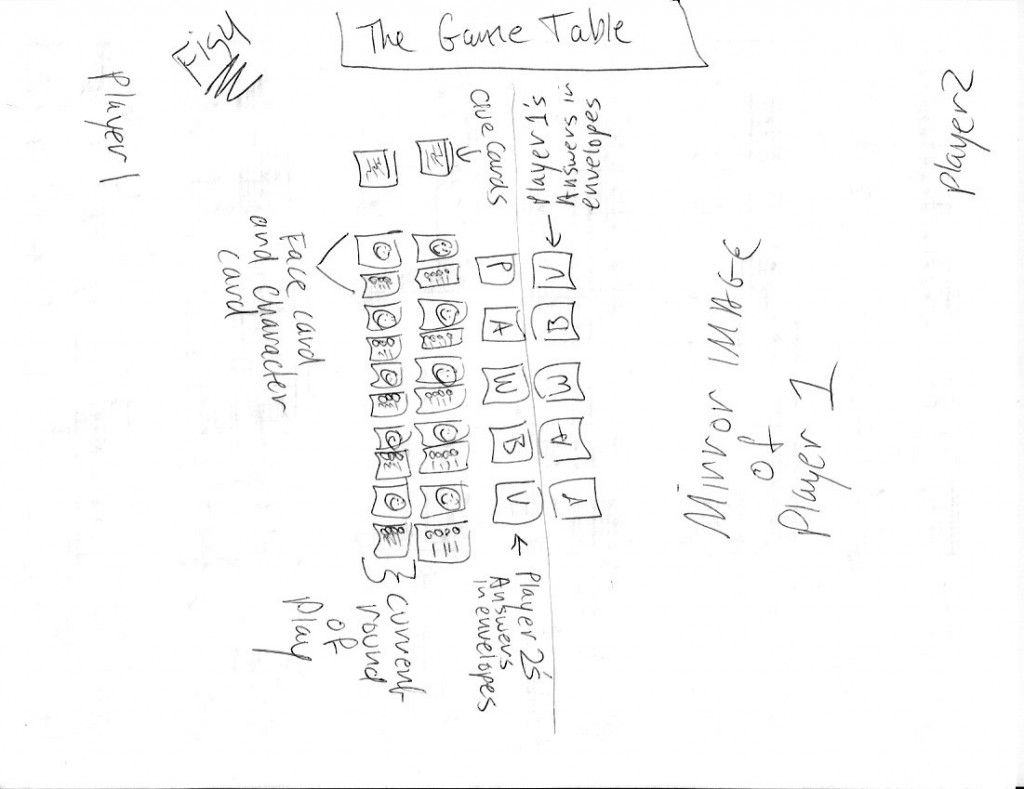

Game play commences with the reading of a story that describes the mystery. Players are told that each story comes from a real life case. Player tokens are placed on a game board and five location cards are placed on the board to make its appearance resemble locations described in the story (Figure 3). Face cards and character cards are arranged in piles (Figure 4) according to the five character categories (i.e., Perpetrator, Victim, etc.). Face cards and character cards are shuffled within each category. Players take turns picking a face-character pair for each of the five categories. These selections are noted on answer keys and placed in envelopes.

For each round of play, players will roll a 4-sided die and move a token on a game board to one of the locations along the shortest path possible. Locations are spaced far enough apart so that, on average, it takes 10 turns to get from location to location. During each round of play, five face cards and five character cards are flipped over for each player to reveal the faces and character information. Then, a clue card is flipped over. Clue cards ask one “yes-no” question about a character type (e.g., Victim) using the context of the story, and that question is meant to reveal something about the character’s four traits (“what,” “where,” “why,” and “when”). For example, a question intended to reveal information about the Victim might read “Was the victim seen in his bedroom between 9pm and midnight on the night of the crime?” This question is designed to get at “when” information for the character. Character cards all have checkboxes to indicate yes or no for the four traits. If the “when” box is checked in this example, the player should conclude that the character was present during the crime, and this character might be the Victim. For each round of play, a new set of cards is revealed beneath the previous set.

After the first round of play, players can switch any set of cards from one category with another set from the same category and hazard a guess. The opponent indicates whether the guess is correct with a simple “yes” or “no,” and how many are correct without revealing which ones are correct. When a player lands in one of the five locations on the board, they must Report to the Chief Inspector. During the report, they must stack all the cards from previous rounds of play, making them inaccessible for swapping. Statistically, this should happen about every 10 rounds of play, and thus players will do this four times during the game. The final guess is made after 40 rounds of play and after the player has visited the final location. If you’re still reading this, welcome to the flaming helicopter crash that is experimental game design.
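The "about every 10 rounds" spacing can be checked with a little arithmetic before any board gets printed. The sketch below rests on assumptions the post does not state: a player moves the full roll along the shortest path and arrives as soon as the running total reaches or passes the location (no exact roll needed). The function name `expected_rolls` and the candidate spacings are hypothetical. Since the mean roll of a 4-sided die is 2.5, spacings in the mid-twenties are the neighborhood to look at.

```python
from functools import lru_cache

DIE_SIDES = 4  # the 4-sided die described in the post


@lru_cache(maxsize=None)
def expected_rolls(distance):
    """Expected number of rolls to cover `distance` spaces.

    Assumes the token stops on reaching or overshooting the location.
    Recurrence: E(d) = 1 + average of E(d - k) for k = 1..DIE_SIDES,
    with E(d) = 0 once d <= 0.
    """
    if distance <= 0:
        return 0.0
    remaining = sum(expected_rolls(distance - k) for k in range(1, DIE_SIDES + 1))
    return 1.0 + remaining / DIE_SIDES


# Candidate spacings between locations (assumed values, not from the game).
for spaces in (20, 24, 25, 30):
    print(spaces, round(expected_rolls(spaces), 2))
```

If the real rules require an exact roll to enter a location, the recurrence changes and the expected number of turns goes up, so the spacing would need to shrink to keep roughly four reports in a 40-round game.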

While the game could be played with character cards alone, the inclusion of face cards allows us to expose the player’s bias. While each character within a race-gender classification has a unique character card, there may be characters from other races or genders with a similar card. Eventually, players have to start making judgments about faces in addition to character traits, and that is how we intend to expose racial bias. While judgments of character cards are explicit, judgments of face cards are implicit. The distribution of faces, clues, and character traits had to be carefully balanced within each character category. Within each category (e.g., Perpetrator), there are 40 face cards and 40 character cards that correspond to each round of play. Twenty of the faces are male and 20 are female. Within each gender group, there are four members from each of the five racial groups. Each of those four faces is paired with a unique character card.
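The balancing constraint is easy to get wrong by hand, so here is a minimal sketch that builds the face deck and counts it back. It assumes a fully crossed design of 5 categories by 5 racial groups by 2 genders with four faces per cell, which matches the numbers above; the `face_id` strings and the short category labels are placeholders made up for this example.

```python
from collections import Counter
from itertools import product

RACES = ("Caucasian", "Asian", "Hispanic", "Indian", "Black")
GENDERS = ("male", "female")
# Short labels for the five character categories (labels are assumptions).
CATEGORIES = ("Perpetrator", "Accomplice", "Witness", "Bystander", "Victim")
FACES_PER_CELL = 4  # four faces per race-gender pair within a category


def build_deck():
    """Build one face card per (category, race, gender, index) combination."""
    deck = []
    for category, race, gender in product(CATEGORIES, RACES, GENDERS):
        for i in range(FACES_PER_CELL):
            deck.append({
                "category": category,
                "race": race,
                "gender": gender,
                # Placeholder ID; real cards would point to a photo.
                "face_id": f"{category}-{race}-{gender}-{i}",
            })
    return deck


deck = build_deck()
per_category = Counter(card["category"] for card in deck)
per_race = Counter(card["race"] for card in deck)
per_race_gender = Counter((card["race"], card["gender"]) for card in deck)

print(len(deck))              # total face cards
print(per_category)           # cards in each character category
print(per_race)               # cards for each racial group
print(per_race_gender)        # cards for each race-gender pair
```

Counting the deck back this way confirms that the per-category numbers (40 cards, 20 male, four per racial group) and the overall numbers (200 faces, 40 per racial group, 20 of each gender) describe the same set of cards.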

While the experiment and the game mechanic are in far better shape, there are many things that could be improved. There are no tangible resources to manage in the game. Nevertheless, not every game requires physical resources. And managing resources might actually make it easier to keep track of the characters, thus making the game more about record keeping. Similarly, writing down clues might make the game too easy. Consequently, the one boundary that we have imposed on players is that they are not allowed to take notes. More important, however, is that the game lacks a method for controlling flow. I’m concerned that the game will either be too easy or too difficult. Only play testing will determine whether that’s the case. Finally, in writing this post, I realized that it should be possible to combine character and face cards, but I’m forgetting why I didn’t do that in the first place. Why do I have a sense of dread? What am I doing in this helicopter, and where are we going?

**I use the term “race” to indicate the socio-cultural group or ethnicity that the player identifies with.

Hilliar, K. F. and Kemp, R. I. (2008) Perception, vol. 37, pp 1605-1608.
